Review of Serverless Development on AWS by Sheen Brisals and Luke Hedger

Chapter 1: Introduction to Serverless on AWS

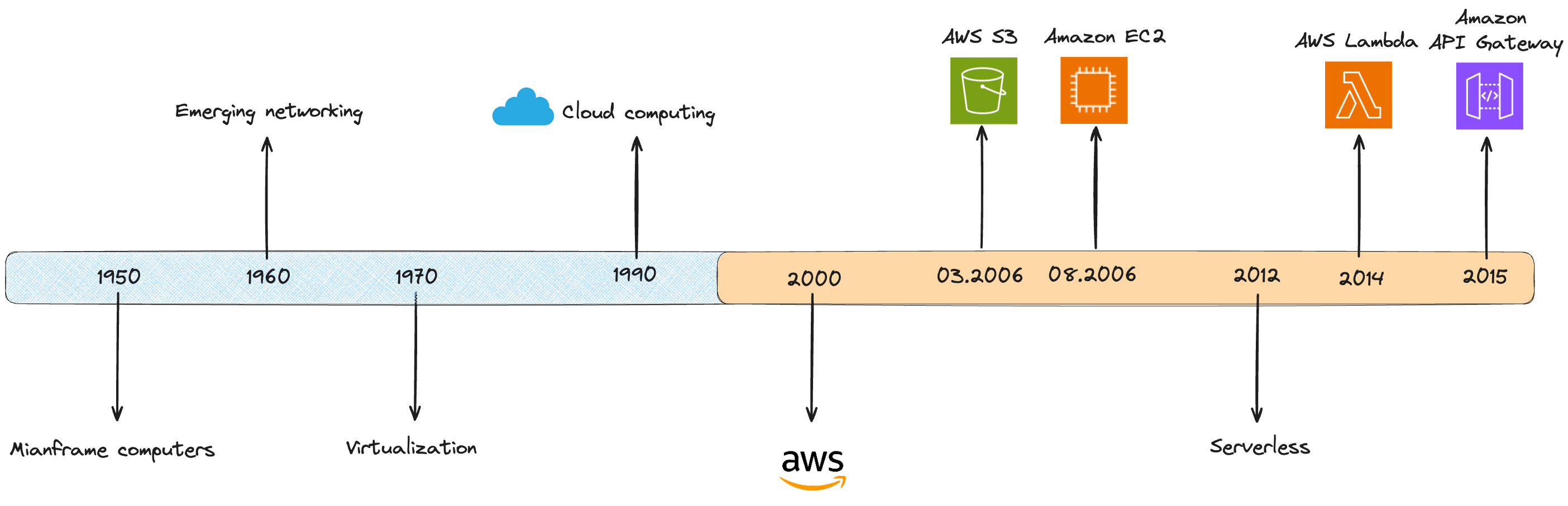

The book begins by exploring the evolution from traditional enterprise computing to the AWS cloud revolution.

In the cloud era, a key paradigm shift is the concept of running everything as a service. This idea is particularly intriguing. While I’m already familiar with traditional cloud service models—such as Infrastructure as a Service (IaaS), Platform as a Service (PaaS), and Software as a Service (SaaS)—this broader perspective adds a new layer of understanding.

Take databases, for example. Running a database as a service (DBaaS) abstracts away the complexity of data storage and operations. Another crucial concept is Function as a Service (FaaS). With FaaS, you can execute your code without managing the underlying servers, operating system, or runtime environment: you simply write the code, and the cloud provider runs it. This marks the beginning of the serverless journey in the cloud.
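To make the FaaS idea concrete, here is a minimal sketch of what "just writing the code" looks like on AWS Lambda's Python runtime. Lambda calls a handler function with an `event` (the input payload) and a `context` object. The function name `handler` and the `name` field in the event are assumptions for illustration, since the handler name is whatever you configure for the function.

```python
import json


def handler(event, context):
    """Hypothetical Lambda handler that greets the caller named in the event.

    Lambda invokes this with the event payload (a dict for JSON input) and a
    context object; no server, OS, or runtime setup appears in the code.
    """
    # 'name' is a hypothetical field of the incoming event.
    name = event.get("name", "world")
    # Return an API Gateway-style proxy response (statusCode + JSON body string).
    return {
        "statusCode": 200,
        "body": json.dumps({"message": f"Hello, {name}!"}),
    }


if __name__ == "__main__":
    # Local smoke test: call the handler directly, without a context object.
    print(handler({"name": "Lambda"}, None))
```

In a real deployment you would not call `handler` yourself. Lambda invokes it once per event, which is why the code contains no server loop or port binding.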

The book distinguishes between two types of serverless services: managed and fully managed.

For instance, as a developer, when you consume APIs from third-party vendors, you typically don’t have access to the implementation details or operational management. These aspects are handled by the provider—this is an example of a managed service.

In the AWS ecosystem, services like Amazon RDS are considered managed services. However, you still need to configure certain elements such as VPCs, security groups, and instance types. In contrast, services like DynamoDB are fully managed—they offer a higher level of abstraction and require minimal setup. You can start using them almost instantly with sensible defaults. These fully managed services are often referred to as serverless services.
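The contrast between managed and fully managed services shows up in how little you have to specify. The sketch below assembles the parameters for creating an on-demand DynamoDB table. The keyword names follow the boto3 `create_table` API, while the table name `orders` and the key attribute `pk` are hypothetical. Note what is absent: no instance type, no VPC, no security group, and (with `PAY_PER_REQUEST`) no capacity planning.

```python
def on_demand_table_params(table_name: str) -> dict:
    """Build keyword arguments for boto3's DynamoDB ``create_table`` call.

    Only a key schema is required; with on-demand billing there is no
    instance type, VPC, or provisioned throughput to choose.
    """
    return {
        "TableName": table_name,
        # Every item must have a string partition key named 'pk' (hypothetical).
        "KeySchema": [{"AttributeName": "pk", "KeyType": "HASH"}],
        "AttributeDefinitions": [{"AttributeName": "pk", "AttributeType": "S"}],
        # On-demand mode: billed per request, no provisioned throughput.
        "BillingMode": "PAY_PER_REQUEST",
    }


if __name__ == "__main__":
    params = on_demand_table_params("orders")
    print(params)
    # With AWS credentials configured, this would create the table:
    #   import boto3
    #   boto3.client("dynamodb").create_table(**params)
```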

Characteristics of Serverless

- Pay-per-use: This is slightly different from the general pay-as-you-go model. With pay-per-use, you are charged only for what you actually consume, such as each function invocation and its execution time, rather than for allocated infrastructure that you pay for regardless of usage.

- Autoscaling and scale to zero: Autoscaling is a key feature of cloud computing, but the ability to scale to zero is a defining trait of serverless. For example, with AWS Lambda, once a function stops receiving invocations and stays idle, its execution environments are shut down and the number of active instances drops to zero, so you incur no compute charges while it is not in use.

- High availability: High availability is built into fully managed services. Running your workloads on serverless platforms gives you high availability out of the box, without manual configuration; AWS Lambda, for instance, runs functions across multiple Availability Zones within a Region.

- Cold starts: A cold start is a behavior specific to serverless environments. When a Lambda function has been idle for a certain period, its execution environment is shut down to save resources. The next time the function is invoked (or when traffic grows beyond the environments that are already warm), AWS must initialize a new execution environment, which introduces a small delay. This delay is referred to as a cold start.
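The cold start behavior can be observed from inside a function. In Lambda, module-level code runs once, during the initialization phase of a new execution environment, while the handler runs on every invocation; a warm environment reuses the already-initialized module. The sketch below, a minimal illustration rather than a profiling tool, uses a module-level flag to report whether an invocation was a cold start. The names `_is_cold`, `_INIT_TIMESTAMP`, and `handler` are hypothetical.

```python
import time

# Module-level code runs once per execution environment, during the init
# phase (i.e. as part of a cold start). Expensive setup such as SDK clients
# or database connections is typically placed here so warm invocations reuse it.
_INIT_TIMESTAMP = time.time()
_is_cold = True


def handler(event, context):
    """Hypothetical handler that reports whether this invocation was cold."""
    global _is_cold
    cold = _is_cold
    # Every later invocation served by this same environment is warm.
    _is_cold = False
    return {
        "cold_start": cold,
        # Seconds since this execution environment was initialized.
        "environment_age_s": round(time.time() - _INIT_TIMESTAMP, 3),
    }
```

Calling `handler` twice in the same process mimics two invocations landing on one warm environment: the first reports `cold_start: True`, the second `cold_start: False`. A fresh environment (after scale to zero, or under a traffic spike) starts the cycle again.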

This chapter also introduces some of the unique advantages of serverless.

One notable concept is individuality. In serverless architectures, each function or service can be configured and optimized independently. The idea that “one size fits all” does not apply. Depending on business requirements, one function can be tuned for performance, while another can be optimized for cost-efficiency.

Serverless also enables fine-grained control. Each resource can be adjusted based on specific business or operational needs—this is referred to as resource granularity.
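Individuality and resource granularity mean each function carries its own settings. The sketch below models two hypothetical functions, one tuned for latency and one for cost, and checks them against Lambda's configurable ranges (128 to 10,240 MB of memory, timeouts up to 900 seconds). It also computes GB-seconds, the unit in which Lambda meters duration (memory in GB multiplied by execution time in seconds). The function names and durations are assumptions for illustration, and prices are deliberately left out.

```python
from dataclasses import dataclass

# Lambda's configurable ranges (memory in MB, timeout in seconds).
MIN_MEMORY_MB, MAX_MEMORY_MB = 128, 10_240
MAX_TIMEOUT_S = 900


@dataclass(frozen=True)
class FunctionConfig:
    """Per-function settings; each function is tuned independently."""
    name: str
    memory_mb: int
    timeout_s: int

    def __post_init__(self) -> None:
        # Reject settings outside the ranges Lambda accepts.
        if not MIN_MEMORY_MB <= self.memory_mb <= MAX_MEMORY_MB:
            raise ValueError(f"{self.name}: memory {self.memory_mb} MB out of range")
        if not 1 <= self.timeout_s <= MAX_TIMEOUT_S:
            raise ValueError(f"{self.name}: timeout {self.timeout_s} s out of range")

    def gb_seconds(self, duration_ms: float) -> float:
        """Approximate metered compute for one invocation: memory (GB) x duration (s)."""
        return (self.memory_mb / 1024) * (duration_ms / 1000)


# Hypothetical functions: one tuned for latency, one for cost.
checkout_api = FunctionConfig("checkout-api", memory_mb=1024, timeout_s=10)
nightly_report = FunctionConfig("nightly-report", memory_mb=128, timeout_s=900)

if __name__ == "__main__":
    # Assumed durations, for illustration only.
    print(checkout_api.gb_seconds(200))     # 1 GB held for 0.2 s
    print(nightly_report.gb_seconds(1000))  # 0.125 GB held for 1 s
```

Because each function gets its own `FunctionConfig`, raising memory for the latency-sensitive function has no effect on the cost-optimized one.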

From a security standpoint, serverless promotes least privilege access. You can assign the minimum permissions required for each function to complete its task, enhancing security and privacy at the function level.
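On AWS, least privilege is expressed through IAM policy documents attached to each function's execution role. The sketch below builds such a document in Python for a hypothetical function that only reads single items from one DynamoDB table. The region, account ID, and table name are placeholders, and in practice this would usually be declared in an infrastructure-as-code template rather than assembled by hand. The structure (`Version`, `Statement`, `Effect`, `Action`, `Resource`) follows the standard IAM policy grammar.

```python
import json


def read_only_table_policy(region: str, account_id: str, table: str) -> dict:
    """Build an IAM policy that allows only single-item reads on one table.

    Anything not listed is implicitly denied by IAM, so the function can do
    exactly its job and no more.
    """
    table_arn = f"arn:aws:dynamodb:{region}:{account_id}:table/{table}"
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                # Only the action this function needs.
                "Action": ["dynamodb:GetItem"],
                # Scoped to a single table ARN rather than "*".
                "Resource": table_arn,
            }
        ],
    }


if __name__ == "__main__":
    # Placeholder region, account ID, and table name.
    policy = read_only_table_policy("eu-west-1", "123456789012", "orders")
    print(json.dumps(policy, indent=2))
```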

I particularly like the idea of starting simple and scaling fast. Serverless supports incremental, iterative development, and event-driven architecture (EDA) lies at its core.
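Event-driven architecture is easiest to see in miniature. The sketch below is a tiny in-process stand-in for an event bus such as Amazon EventBridge; it does not use the EventBridge API. A producer publishes an event by type without knowing who consumes it, and each subscriber reacts independently. The event type `OrderPlaced` and both consumers are hypothetical.

```python
from collections import defaultdict
from typing import Callable

Event = dict
Handler = Callable[[Event], None]


class EventBus:
    """Minimal in-memory publish/subscribe bus (illustration only)."""

    def __init__(self) -> None:
        self._subscribers: dict[str, list[Handler]] = defaultdict(list)

    def subscribe(self, event_type: str, handler: Handler) -> None:
        """Register a handler for one event type."""
        self._subscribers[event_type].append(handler)

    def publish(self, event_type: str, detail: Event) -> int:
        """Deliver the event to every subscriber; return how many received it."""
        handlers = self._subscribers.get(event_type, [])
        for handler in handlers:
            handler(detail)
        return len(handlers)


# Hypothetical consumers that each react to the same event independently.
shipped: list[str] = []
emailed: list[str] = []

bus = EventBus()
bus.subscribe("OrderPlaced", lambda d: shipped.append(d["order_id"]))
bus.subscribe("OrderPlaced", lambda d: emailed.append(d["order_id"]))

# The producer only publishes; it holds no reference to the consumers.
delivered = bus.publish("OrderPlaced", {"order_id": "o-1"})
```

This is where "start simple" pays off: a new consumer can be added later by subscribing, without touching the producer. In a serverless deployment the bus would be a managed service and each consumer a separate function, so consumers scale and fail independently.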

Beyond the technical benefits, the book emphasizes that now is the time for businesses to start adopting serverless. However, successful adoption requires a capable engineering team. Becoming a proficient serverless engineer involves acquiring a new skill set and embracing a DevOps mindset.

The authors describe serverless as a technology ecosystem. I was reminded of the ecosystems I studied in high school science—systems that include both living and non-living factors. Similarly, in serverless computing, you cannot run an application on a single service alone. Many interconnected components work together to form a complete solution.

One interesting detail I learned in this chapter: Have you ever noticed that some AWS services start with the “AWS” prefix while others begin with “Amazon”? According to the book, AWS originally followed a naming convention: services with the “Amazon” prefix were typically standalone (e.g., Amazon DynamoDB), whereas those with the “AWS” prefix supported or integrated with other services (e.g., AWS Step Functions). However, it’s unclear whether this convention still holds as services continue to evolve.

Each chapter ends with an expert interview. Chapter 1 features an interview with Danilo Poccia, Chief Evangelist at Amazon Web Services.